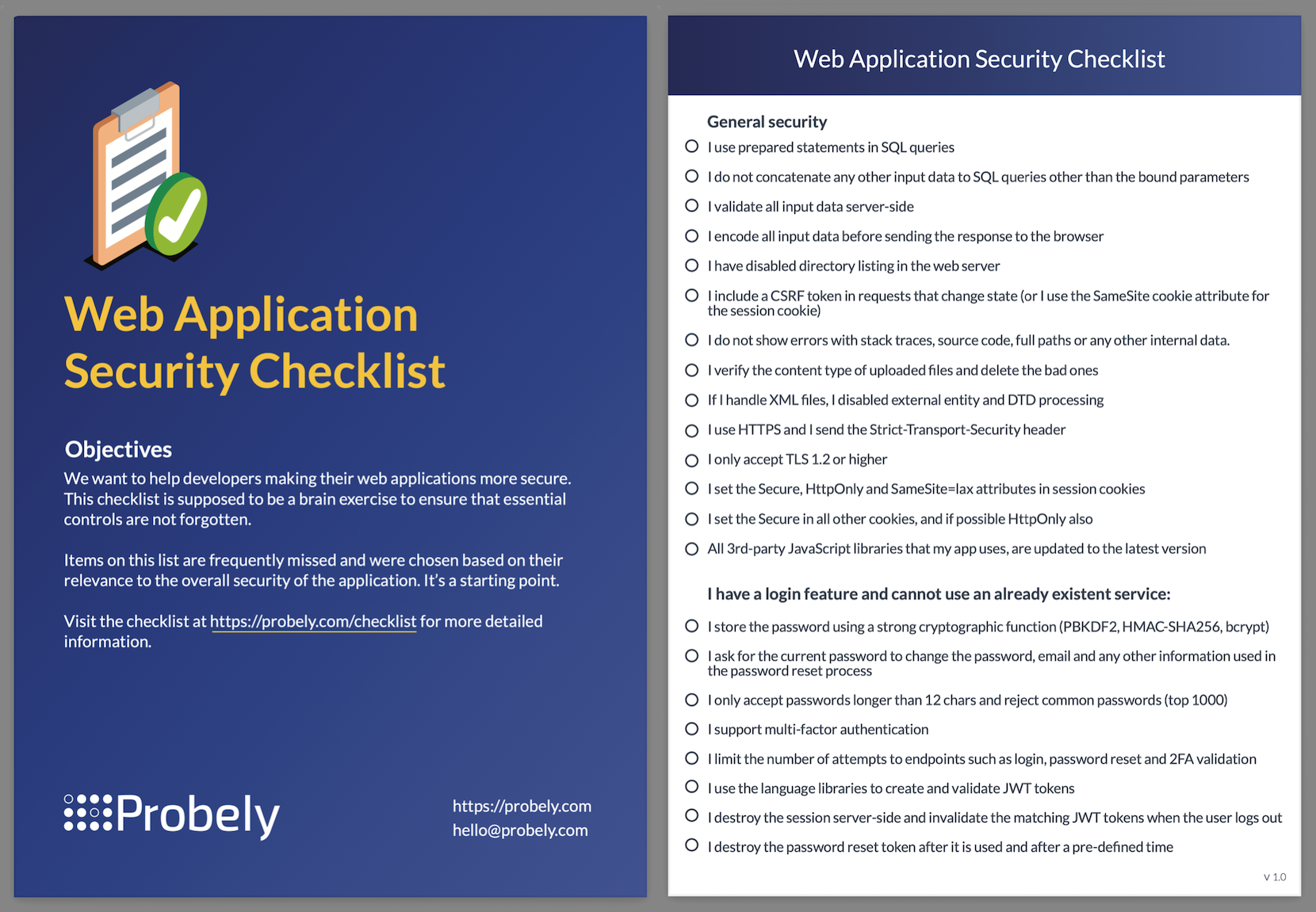

Web Application Security Testing Checklist

Recently, we created a checklist, a Web Application Security Checklist for developers. Why?

Well, because we want to help developers avoid introducing vulnerabilities in the first place. And for that, the security development process should start with training and creating awareness. Searching for vulnerabilities with a web scanner is essential, but we should always try to make security shift left, i.e. place it at the beginning of the development lifecycle. It is an investment: instead of being reactive, invest in prevention.

So, starting with a checklist seems like a good idea. Checklists are incredible tools: they are concise, easy to read and give you actionable items.

We tried to make sure ours meets those requirements.

Another advantage of a checklist like this is that it can be printed in a postcard format and distributed to your developers. Maybe they notice it lying around on their desk and, from time to time, they take a look at it and check if all items are accounted for.

We also published it on GitHub, making it easier for you to keep track of updates.

What to include?

The choice of including an item or not in the checklist is debatable. We are sure we left important stuff out, but the list is a dynamic thing, and it will improve over time.

Our checklist is organized into two parts. The first one, General security, applies to almost any web application. The second one is more relevant if your application has custom-built login support, and you are not using a third-party login service, like Auth0 or Cognito.

We will try to explain the reasoning behind each item on the list. You might notice that some items are in the list because the underlying vulnerability is really dangerous, like SQL injection. Others are not that common but are usually easy to exploit, and their impact is likely very high. Some others may not pose an immediate danger, but are frequently seen in the wild, and contribute negatively to your security posture image.

General security

I use prepared statements in SQL queries

SQL injections occupy the first place in OWASP Top 10 for a good reason: they are relatively common, easy to exploit and the impact is high. That is why we put it in the first place too.

Nowadays, you might not even see SQL in your code: it is all hidden underneath a nice ORM, and we hope it uses prepared statements. But if you find yourself building SQL, ensure you use your programming language’s functions to bind the input to the right part of the query. These functions usually have bind in their name and use the character ? (question mark) as a placeholder for your input/variables.
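
As a minimal sketch of what binding looks like, assuming Python and its built-in sqlite3 module (the users table and the function names are made up for illustration):

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with one user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.execute("INSERT INTO users (email) VALUES (?)", ("alice@example.com",))
    return conn


def find_user(conn: sqlite3.Connection, email: str):
    """Look up a user by email using a bound parameter."""
    # The ? placeholder is filled in by the driver, so the input is
    # never spliced into the SQL text itself.
    cur = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cur.fetchone()
```

With this approach, a classic injection attempt such as `' OR '1'='1` is treated as a (non-matching) email address rather than as SQL.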

I do not concatenate any other input data to SQL queries other than the bound parameters

Do not build SQL queries by concatenating strings, particularly strings that contain user-controlled input. The only safe place to use strings that include user input is in the bound parameters, which will then replace the ? in the query.

This item is distinct from the previous one: you might be using prepared statements, but that does not protect you if you still concatenate input into parts of the query that cannot take bound parameters, such as the ORDER BY clause in MySQL.
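
One common way to handle parts of a query that cannot be bound is an allow-list. The sketch below assumes Python with sqlite3. The products table, the column names and the function name are hypothetical.

```python
import sqlite3

# Hypothetical allow-lists: the only sort columns and directions a caller may pick.
ALLOWED_SORT_COLUMNS = {"name", "created_at"}
ALLOWED_DIRECTIONS = {"ASC", "DESC"}


def list_products(conn: sqlite3.Connection, sort_by: str, direction: str, min_price: float):
    """Return product names, sorted by a column chosen from the allow-list."""
    if sort_by not in ALLOWED_SORT_COLUMNS:
        raise ValueError("unsupported sort column")
    direction = direction.upper()
    if direction not in ALLOWED_DIRECTIONS:
        raise ValueError("unsupported sort direction")
    # Only values taken from the allow-lists reach the SQL text;
    # the user-supplied price still goes through a bound parameter.
    sql = f"SELECT name FROM products WHERE price >= ? ORDER BY {sort_by} {direction}"
    return [row[0] for row in conn.execute(sql, (min_price,))]
```

Anything outside the allow-list is rejected before a query string is ever built.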

SQL injections are really dangerous, so we included two items about them.

I validate all input data server-side

Input from the user must be validated at least server-side to prevent attacks where the client-side validation, if any, is bypassed. Web applications frequently have JavaScript code that prevents the user from entering unexpected values, but that code can easily be disabled within the browser itself, without any special tool.

Users can also directly modify any value in the URL and virtually any part of the HTTP request. Validations on the server are the only line of defense that the user cannot disable or easily bypass.

Validating only on the client-side is a common mistake. The consequences are context-dependent: imagine a shopping cart where the price is a hidden parameter on the page and the attacker changes it to a tenth of the price. Or to zero.
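
For the shopping cart case, a rough sketch of the server-side check could look like this in Python. The catalogue, the SKU names and the quantity limits are assumptions made up for the example. The point is that the price never comes from the request.

```python
from decimal import Decimal

# Hypothetical server-side catalogue; a real application would query its database.
CATALOGUE = {"sku-1": Decimal("19.90"), "sku-2": Decimal("5.00")}


def order_total(items: dict) -> Decimal:
    """Compute the total from server-side prices, ignoring any client-sent price."""
    total = Decimal("0")
    for sku, quantity in items.items():
        if sku not in CATALOGUE:
            raise ValueError(f"unknown product: {sku!r}")
        # bool is a subclass of int in Python, so it is rejected explicitly.
        if isinstance(quantity, bool) or not isinstance(quantity, int):
            raise ValueError("quantity must be an integer")
        if not 1 <= quantity <= 100:
            raise ValueError("quantity must be between 1 and 100")
        total += CATALOGUE[sku] * quantity
    return total
```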

I encode all input data before sending the response to the browser

Cross-site scripting, or XSS, occurs when a malicious input is echoed back to the browser by the server, without being correctly encoded. What this means is that if I visit the following URL

https://example.com?greeting=Victim<script>alert(27)</script>

and the greeting parameter value is placed in the response verbatim, the JavaScript in there will likely execute and deliver the “attack”. In the most common scenario, the server would have to encode the tag delimiters with HTML entities. The right encoding will depend on where the input is placed on the web page.
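
To illustrate the most common case (input placed inside an HTML element body), here is a small Python sketch using the standard library's html.escape. The render_greeting function is hypothetical, and other contexts such as attributes, URLs or inline JavaScript need different encoding.

```python
import html


def render_greeting(greeting: str) -> str:
    """Place user input inside an HTML element body, encoded as entities."""
    # html.escape turns &, <, > and quotes into HTML entities.
    return f"<p>Hello, {html.escape(greeting)}!</p>"


print(render_greeting("Victim<script>alert(27)</script>"))
# prints: <p>Hello, Victim&lt;script&gt;alert(27)&lt;/script&gt;!</p>
```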

XSS is at seventh place in the OWASP Top 10, due to its prevalence: “found in around two-thirds of all applications”. A lot of languages and frameworks have template systems that make it harder to introduce XSS, but these vulnerabilities are still pretty common.

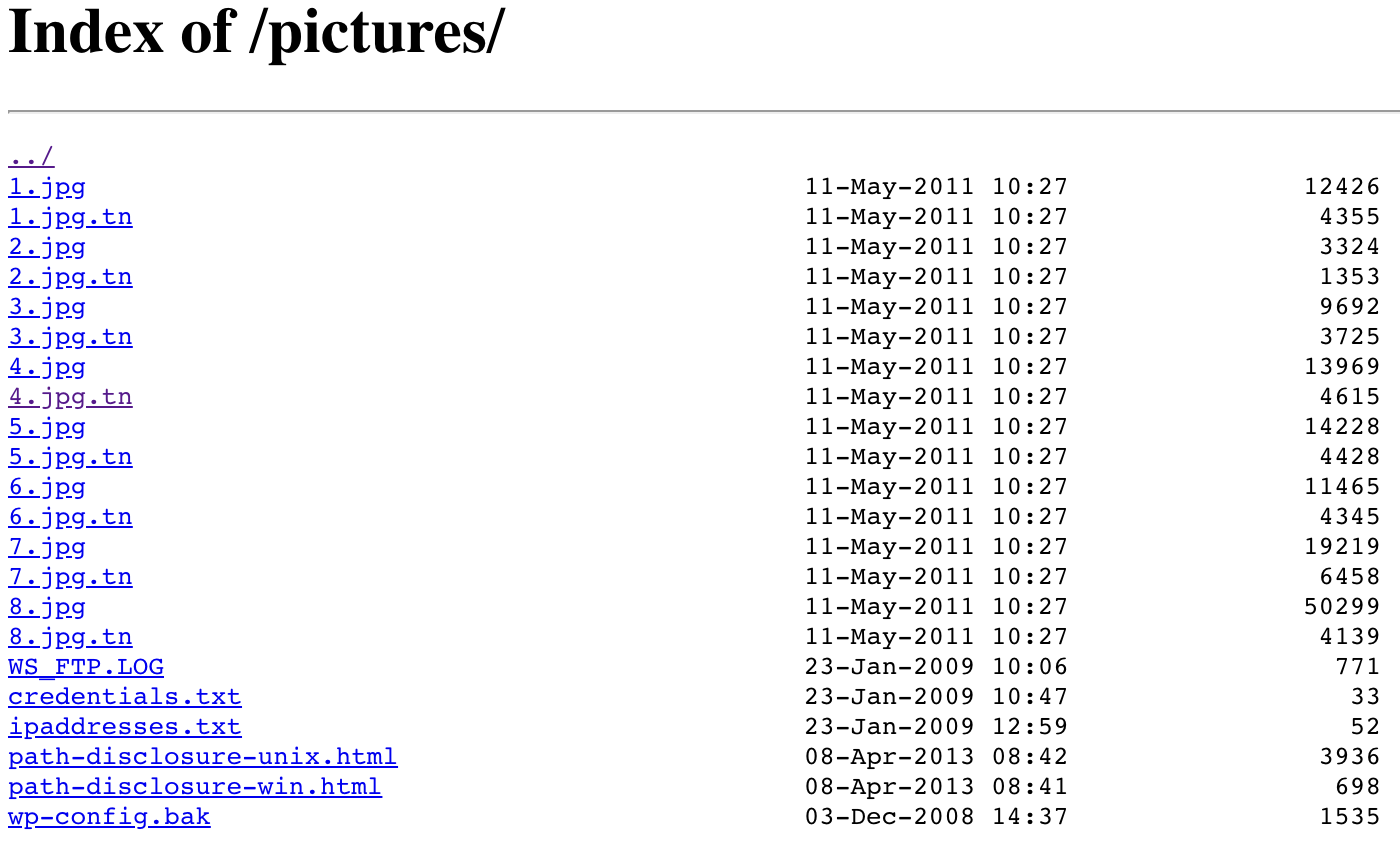

I have disabled directory listing in the web server

Have you seen pages like these before?

Directory listing is a web server feature that lists directory contents in a ‘browsable’ way. It is also known as Indexes. Enabled by default in some web servers, it helps attackers find files to investigate without having to guess their paths. It also helps search engines index content that should not be exposed, making it available through a well-crafted search.

Despite being very easy to disable with a single configuration line, it is frequently enabled on many servers. It is important to ensure such a basic attack is not possible, thus the reason for the inclusion in our list.

I include a CSRF token in requests that change state (or I use the SameSite cookie attribute for the session cookie)

CSRF, short for Cross-Site Request Forgery, has been removed from the latest OWASP Top 10 because CSRF defenses are now included in many frameworks, making it a less frequent problem. We have space for more than 10 items, and CSRF consequences can be devastating, so we decided to include it. The impact is application dependent, but if you imagine an attacker accessing your account and acting as they please, you get a glimpse of what it can do.

A recent cookie attribute, SameSite, further helps to mitigate CSRF by preventing the affected cookie from being included in requests triggered by a third-party site, a pre-condition for any CSRF attack. This is a much simpler solution than building a CSRF defense, so it is perfect for applications not using a framework, and as an extra safeguard for the ones using a framework that has the defenses built in.
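
If you do need to build the token check yourself, the core of it is small. This is only a sketch in Python, assuming the token is stored in the server-side session and sent back in a form field or header. Both function names are made up.

```python
import hmac
import secrets
from typing import Optional


def new_csrf_token() -> str:
    """Create an unguessable token to store in the user's session."""
    return secrets.token_urlsafe(32)


def is_valid_csrf_token(session_token: Optional[str], submitted_token: Optional[str]) -> bool:
    """Check the token sent with a state-changing request against the session's token."""
    if not session_token or not submitted_token:
        return False
    # compare_digest runs in constant time, so it does not leak how many
    # leading characters matched.
    return hmac.compare_digest(
        session_token.encode("utf-8"), submitted_token.encode("utf-8")
    )
```

Every request that changes state would call is_valid_csrf_token and be rejected when it returns False.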

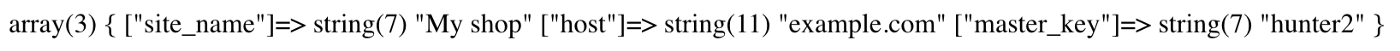

I do not show errors with stack traces, source code, full paths, or any other internal data.

Verbose errors are a widespread problem that hackers love. Security professionals also like them, but only when wearing a tester hat. They can give a wide array of details about the internals of the application: which functions are being used, source code excerpts, and even configurations or secrets.

The application should be configured not to output errors or debug information like this to the client.
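
Most frameworks have a setting for this. Where you handle errors yourself, the idea can be sketched as follows in Python: log the details on the server and send the client only a generic message with a reference. The function name and the response shape are assumptions for the example.

```python
import logging
import uuid

logger = logging.getLogger("app")


def safe_error_response(exc: Exception) -> dict:
    """Log the full error server-side and return a generic message for the client."""
    error_id = uuid.uuid4().hex
    # The stack trace goes to the server log only, tagged with the reference.
    logger.error("unhandled error %s", error_id, exc_info=exc)
    return {"status": 500, "body": f"Internal error. Reference: {error_id}"}
```

The reference lets support staff find the matching log entry without exposing internals to the user.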

I verify the content type of uploaded files and delete the bad ones.

Allowing users to upload files to your web application can be a dangerous feature. We are accepting a file that is completely controlled by the user and (normally) stored on your filesystem next to your application files. And you need to serve the file back to the user(s).

One attack starts by uploading a file with some malware inside. If you publicly serve this file from your domain, you can have the domain blocked by Google Safe Browsing, effectively causing a Denial-of-Service. Another attack involves executing code contained in the uploaded file, possibly running arbitrary code in your server, or in a victim’s browser context.

You should always verify the content of uploaded files. At a minimum, verify the file’s magic numbers, which identify its content type. Ideally, you should also scan the file for malware, for example with VirusTotal.
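
A magic-number check can be as simple as comparing the first bytes of the upload against known signatures. The Python sketch below covers only three formats and uses a hypothetical function name. It is the minimum check, not a replacement for malware scanning.

```python
# File signatures (magic numbers) for the few types this example accepts.
MAGIC_NUMBERS = {
    b"\x89PNG\r\n\x1a\n": "image/png",
    b"\xff\xd8\xff": "image/jpeg",
    b"%PDF-": "application/pdf",
}


def sniff_content_type(data: bytes):
    """Return the content type implied by the leading bytes, or None if unknown."""
    for magic, content_type in MAGIC_NUMBERS.items():
        if data.startswith(magic):
            return content_type
    return None
```

An upload whose sniffed type is None, or does not match what the application expects, would be rejected and deleted.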

If I handle XML files, I have disabled external entity and DTD processing

XML content can contain references to external entities, which are fetched and replaced during XML parsing, like a variable pointing to a file, for instance. A well-crafted input that reaches an insecure XML parser might be able to read files from the server and exfiltrate their contents to a server controlled by the attacker. This vulnerability is called XML External Entity (XXE) and was added to the OWASP Top 10 in 2017. Despite being recent in the top 10, these vulnerabilities are not new. But they are not trivial to find and exploit in a classical black-box testing approach, so it is better to play it safe and disable external entity and DTD processing when building/using the XML parsing library.
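
How you disable this depends on the parser. As one possible approach in Python's standard library, the sketch below refuses any document that contains a DOCTYPE declaration before handing it to the regular parser. The two-pass design and the names are our own choices for the example, and many parsers offer a dedicated option instead.

```python
import xml.etree.ElementTree as ET
from xml.parsers import expat


class DoctypeForbidden(ValueError):
    """Raised when an XML document contains a DOCTYPE declaration."""


def _reject_doctype(name, system_id, public_id, has_internal_subset):
    raise DoctypeForbidden("DTDs are not allowed in uploaded XML")


def parse_untrusted_xml(data: bytes) -> ET.Element:
    """Parse XML only if it declares no DTD (and therefore no entities)."""
    # First pass: a bare expat parser that aborts at the first DOCTYPE.
    checker = expat.ParserCreate()
    checker.StartDoctypeDeclHandler = _reject_doctype
    checker.Parse(data, True)
    # Second pass: only DTD-free documents reach the regular parser.
    return ET.fromstring(data)
```

Rejecting DTDs outright is stricter than just disabling external entities, which is usually acceptable because few applications need DTDs in user-supplied XML.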

I use HTTPS and I send the Strict-Transport-Security header

Using HTTPS is now a widespread practice, but some sites still insist on being démodé by using insecure cleartext communications, subject to malicious eyes and hands. A lot of initiatives are accelerating HTTPS adoption, such as browsers warning users when the visited site has no HTTPS. Despite that, some resist or have it badly configured, for instance, lacking a redirect from HTTP to HTTPS, to ensure the user won’t inadvertently continue browsing without the communications being encrypted.

The HTTP Strict Transport Security header, or HSTS, solves the missing redirect problem while providing some more useful security features. Despite being a header, and trivial to configure, a lot of sites still don’t use it. It needs to be omnipresent to ensure that unencrypted communications are never seen again.
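
The header is usually set in the web server or framework configuration. To show what it looks like, here is a tiny Python sketch that adds it to a dictionary of response headers. The helper name and the one-year max-age are assumptions. Pick a value that suits your site.

```python
def add_hsts(headers: dict, max_age_seconds: int = 31536000, include_subdomains: bool = True) -> dict:
    """Return a copy of the response headers with Strict-Transport-Security set."""
    value = f"max-age={max_age_seconds}"
    if include_subdomains:
        value += "; includeSubDomains"
    secured = dict(headers)
    secured["Strict-Transport-Security"] = value
    return secured


print(add_hsts({"Content-Type": "text/html"})["Strict-Transport-Security"])
# prints: max-age=31536000; includeSubDomains
```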

I only accept TLS 1.2 or higher

You might have HTTPS enabled, but is it properly configured? Currently, we recommend TLS 1.2 and TLS 1.3 only. All other versions have vulnerabilities that can be used to compromise your connections or have a fragile design that leaves many security researchers uncomfortable. Unless your website has to cater to very old devices, there are few reasons not to use modern TLS configurations.

Support for TLS 1.2 is pretty widespread. Unfortunately, older versions of SSL and TLS are still being supported also, likely for compatibility with older devices. Modern web browsers are usually secure, since they try to use higher versions of TLS, and most browsers auto-update themselves.
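
TLS versions are normally restricted in the web server or load balancer configuration. If your application terminates TLS itself, the same rule can be expressed in code. A minimal sketch with Python's ssl module (the function name is ours, and a real server would also load its certificate with load_cert_chain):

```python
import ssl


def make_server_context() -> ssl.SSLContext:
    """Build a server-side TLS context that refuses anything older than TLS 1.2."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```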

I set the Secure, HttpOnly, and SameSite=lax attributes in session cookies

You are not immediately vulnerable to an attack if you do not have these attributes in your session cookies. These attributes were designed to prevent some attacks and reduce their strength/impact. But do not underestimate their importance! Having them set, particularly in session cookies, will protect you in case you have other vulnerabilities, like missing CSRF protection or content being requested in HTTP in an HTTPS site (mixed content).

Setting them is easy, and the benefits are great, but we still find a lot of web applications without them.
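
Frameworks typically expose these attributes as session settings. To show the resulting header, this Python sketch builds it by hand with the standard http.cookies module (Python 3.8 or later for SameSite). The cookie name session and the function name are assumptions.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id: str) -> str:
    """Build the value of a Set-Cookie header for the session cookie."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["path"] = "/"
    cookie["session"]["secure"] = True      # only sent over HTTPS
    cookie["session"]["httponly"] = True    # not readable from JavaScript
    cookie["session"]["samesite"] = "Lax"   # not sent on most cross-site requests
    return cookie["session"].OutputString()
```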

I set the Secure attribute in all other cookies, and if possible HttpOnly also

The previous item was focused on session cookies, i.e. the ones that can replace your username and password after the initial login step. Ideally, the previous recommendations should be applied to every cookie, as some of them may contain sensitive data, such as other tokens or private information from the user.

Setting the Secure attribute should come with no side effects since your site supports HTTPS, right? On the other hand, it might not be possible to set HttpOnly on all cookies, as many of them are likely used by JavaScript to set some user preference, such as the site language.

We decided to split the “cookie attributes” recommendations into two items because we want to emphasize how important it is to protect your session cookies.

All 3rd-party JavaScript libraries that my app uses are updated to the latest version

Using Components with Known Vulnerabilities has been in the OWASP Top 10 for some time now, and we don’t think it’s going away anytime soon. Reliance on third-party libraries will likely increase, as more of them will be available to ease the programmer’s work. The biggest problem is maintenance: libraries are often created by someone who had a problem to solve, fixed it, and doesn’t really want to provide updates forever. Unless the developer is being paid to do this or has a team helping her, which is rare.

Eventually, someone will find a vulnerability in a library you use, and you will need to update it. Are updates available? Does the update break your site? Keep this in mind during the lifetime of the application. You can minimize potential issues with third-party libraries by keeping up with recent versions and testing them often. If possible, use a well-maintained library, with a good community. Easier said than done though.

I have a login feature and cannot use an already existing service

You want to support different accounts in your application. For that, you need an authentication system and a login process. At a glance, it might seem easy to implement: it is just about getting the username and password, and checking if they match against credentials stored in a database, right? Not really.

There are many things you need to implement to ensure that the process is safe. It’s best to just use a proven third-party authentication provider or an existing authentication framework.

For third-party services, we recommend using (in no particular order) OAuth from Google, Auth0, or Cognito from AWS, just to name a few.

If you cannot use third-party authentication services, we suggest you use the authentication/authorization features provided by your web application development framework. Since it is such a common requirement, frameworks usually have authentication built-in, which is more likely to have been audited. If not, sometimes a popular, well-maintained, authentication module is available. Try to use that, if possible.

I store passwords using a strong cryptographic function (scrypt, argon2, bcrypt, or PBKDF2)

Here be dragons.

Do not store passwords in cleartext. Do not store passwords hashed with algorithms like MD5, SHA2, or SHA3. Even if using a per-user salt. It is not enough. Generic cryptographic hashing algorithms are built to be fast. Password-hashing algorithms are built to be as slow as users can tolerate.

Sadly, we continue to see news about sites that were breached, and thousands or millions of plaintext passwords were leaked, along with other data. Sometimes, affected sites say “don’t worry: we store only password hashes”. That means very little, unfortunately.

If you really must store credentials, make them go through appropriate password-hashing functions like scrypt, argon2, bcrypt, or PBKDF2. These will make the process of obtaining your passwords harder, and will protect your users in case your database is compromised.

In addition to this, you can also use a pepper. A pepper is a secret that you use to provide an additional security layer to your passwords. One common strategy is to use HMAC-SHA256 on the result of the password-hashing function with a secret (pepper) that only your service knows. Do not store the pepper on the database, as a SQL injection would allow an attacker to extract it and render the scheme useless. Store the pepper in a configuration file, for example.
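
The strategy described above can be sketched with Python's standard library, here using scrypt followed by HMAC-SHA256 with the pepper. The cost parameters (n, r, p) and the function names are illustrative assumptions, not tuned recommendations. In practice, prefer a maintained password-hashing library and load the pepper from configuration.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple


def hash_password(password: str, pepper: bytes, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, hash) for storage; the pepper is kept outside the database."""
    salt = salt or os.urandom(16)
    # Slow, memory-hard derivation with a per-user salt.
    derived = hashlib.scrypt(
        password.encode("utf-8"), salt=salt, n=2**14, r=8, p=1, dklen=32
    )
    # Extra layer: HMAC-SHA256 of the derived key with the secret pepper.
    peppered = hmac.new(pepper, derived, hashlib.sha256).digest()
    return salt, peppered


def verify_password(password: str, pepper: bytes, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash for a login attempt and compare in constant time."""
    _, candidate = hash_password(password, pepper, salt)
    return hmac.compare_digest(candidate, expected)
```

Only the salt and the final hash would be stored in the database. Without the pepper, a leaked database alone is not enough to start guessing passwords.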

I ask for the current password to set a new password, email, or any other information used in the password reset process

Asking for the current password before doing any of these changes may prevent other attacks, such as those conveyed through session hijacking, either through an XSS or because someone left the computer unlocked. It also prevents a CSRF to those features in case you don’t have any CSRF-specific protection.

An email change (login) mechanism that is vulnerable to CSRF and does not ask for the current password is a huge risk: the victim can completely lose access to their own account, possibly forever, unless there is human intervention or an additional account recovery mechanism.

I only accept passwords longer than 12 chars and reject common passwords (top 1000)

Having to remember a lot of passwords is something that people do not like, so they use the same short and memorable password (almost) everywhere. Passwords such as qwerty, password and iloveyou have the above characteristics, and all of them are bad.

It is trivial for an attacker to grab a list of the top 100 most used passwords and try them with your email. Even if your password is not in that list, but it is short enough, it might still be possible to brute-force it, trying all passwords starting with the minimum number of characters the site allows, until your 6-character password is found.

Most people will continue to pick bad passwords if they are allowed to do so. It is up to site owners to enforce rules that prevent that, such as a minimum of 12 chars and rejecting passwords that are too common, i.e. too guessable.
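
A rough Python sketch of such a rule follows. The tiny COMMON_PASSWORDS set is a stand-in for a real list of the most used passwords, and the function name is made up.

```python
MIN_LENGTH = 12

# Stand-in for a real list of the most common passwords.
COMMON_PASSWORDS = {"qwerty", "password", "iloveyou", "passwordpassword", "123456789012"}


def password_problems(password: str) -> list:
    """Return the reasons a password is rejected; an empty list means it is accepted."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"must have at least {MIN_LENGTH} characters")
    if password.lower() in COMMON_PASSWORDS:
        problems.append("is too common")
    return problems
```

Returning every reason at once lets the sign-up form tell the user exactly what to fix.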

Much can be said about this topic. NIST Special Publication 800-63B provides a ton of great insight. Please check it before defining a password policy.

I support multi-factor authentication

Multi-factor authentication, sometimes referred to simply as 2FA (two-factor authentication), requires the user to provide some other credential when authenticating, in addition to the username and password pair. Frequently, this second factor is a code obtained through an SMS or an OTP generator. If possible, avoid SMS.

Providing multi-factor authentication to your users improves their protection against credential theft through database leaks, phishing (if using U2F), keyloggers, and other attacks. 2FA gives you, the site owner, much-improved sleep: in the unfortunate event that your user database is breached and user passwords are cracked, an attacker won’t be able to log in to those accounts without the second authentication factor. Unless you are storing multi-factor secrets in the database in cleartext. Remember to encrypt them, or store them elsewhere :)

Multi-factor authentication is now seen as a requirement for some categories of sites, such as social media, sites that have a lot of personal data, or B2B web applications. If you have questions about which 2FA method to provide, choose U2F (best), TOTP, or SMS (try to avoid it), in that order.

I limit the number of attempts to endpoints such as login, password reset, and 2FA validation

Allowing short and well-known passwords while allowing an unlimited number of login attempts is asking for trouble. Limiting the number of attempts that can be made to the authentication-related endpoints is critical to ensure your users are safe. Even if, for some reason, you have users with weak passwords, having limits will raise the bar for the attacker, hopefully high enough for them to quit.

The implementation details and the number of rules can increase dramatically for higher-risk sites, but for most of them, it would be enough to start with limiting the number of attempts per IP address.
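
A per-IP limit can start out as a small sliding-window counter. The Python sketch below keeps everything in memory, so it only suits a single process. The class name, the limit of 5 attempts and the 5-minute window are assumptions for illustration.

```python
import time
from collections import defaultdict, deque


class AttemptLimiter:
    """Allow at most max_attempts per IP address within a sliding time window."""

    def __init__(self, max_attempts: int = 5, window_seconds: float = 300.0, clock=time.monotonic):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._clock = clock
        self._attempts = defaultdict(deque)  # ip -> timestamps of recent attempts

    def allow(self, ip: str) -> bool:
        """Record an attempt and report whether it is within the limit."""
        now = self._clock()
        attempts = self._attempts[ip]
        # Forget attempts that have fallen out of the window.
        while attempts and now - attempts[0] >= self.window_seconds:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False
        attempts.append(now)
        return True
```

The login, password reset and 2FA handlers would call allow() with the client address before doing any other work, and refuse the request when it returns False.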

I use the language libraries to create and validate JSON Web Tokens (JWT)

JWT usage is on the rise, as a mechanism to authenticate and authorize users in web applications. JWT has a simple structure so a programmer might be tempted to create and validate them by hand, with their own code. Some libraries can do that for you, without the risk of introducing a vulnerability when validating the token. Just look up which JWT libraries are available in your language and pick one that’s actively maintained.

I destroy the session server-side and invalidate the matching JSON Web Token (JWT) when the user logs out

It is surprisingly frequent for sites to not destroy the session server-side after the user asks for a logout. The risk is not very high since it will likely require physical access to the victim’s computer. Another attack vector begins with session hijacking (for instance with an XSS) which is then very hard to deal with since a logout by the victim will not kill the stolen session.

In addition to destroying the session on the server, you must also invalidate any JWT that you have associated with that session, or else it can be used instead of cookies to make requests.
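
One way to picture the logout step is a server-side registry that drops the session and remembers the identifier of its token. The Python sketch below assumes each JWT carries a unique jti claim and that signature validation happens elsewhere, in your JWT library. The class and method names are made up.

```python
class SessionRegistry:
    """In-memory sketch linking server-side sessions to the JWTs issued for them."""

    def __init__(self):
        self._jti_by_session = {}   # session id -> jti of the JWT issued at login
        self._revoked_jtis = set()  # tokens that must no longer be accepted

    def login(self, session_id: str, jti: str) -> None:
        self._jti_by_session[session_id] = jti

    def logout(self, session_id: str) -> None:
        # Destroy the session and revoke the token that belongs to it.
        jti = self._jti_by_session.pop(session_id, None)
        if jti is not None:
            self._revoked_jtis.add(jti)

    def session_is_active(self, session_id: str) -> bool:
        return session_id in self._jti_by_session

    def jwt_is_revoked(self, jti: str) -> bool:
        return jti in self._revoked_jtis
```

Requests authenticated with a JWT would check jwt_is_revoked after validating the signature. Revoked entries can be dropped once the token's own expiry time has passed.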

I destroy the password reset token after it is used and after a pre-defined time

When you trigger a password reset process, you will likely receive an email with a unique URL. You click it and you can set the new password for your account. This makes that URL really important: whoever gets hold of it can define the password for your account and lock you out.

To reduce the risk, that URL should be valid only for a short period. Once used, it must be invalidated. Fortunately, most applications already behave this way, but it is one more thing you must take into consideration when developing your own login functionality.
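
Both rules (single use and a short lifetime) fit in a few lines. This Python sketch keeps tokens in memory and stores only their SHA-256 digest. The class name and the 15-minute lifetime are assumptions, and a real application would persist this in its database.

```python
import hashlib
import secrets
import time


class ResetTokenStore:
    """Issue password reset tokens that expire and can be redeemed only once."""

    def __init__(self, ttl_seconds: float = 900.0, clock=time.time):
        self._ttl = ttl_seconds
        self._clock = clock
        self._pending = {}  # sha256(token) -> (user id, expiry timestamp)

    @staticmethod
    def _digest(token: str) -> str:
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, user_id: str) -> str:
        """Create a token to embed in the reset URL sent by email."""
        token = secrets.token_urlsafe(32)
        self._pending[self._digest(token)] = (user_id, self._clock() + self._ttl)
        return token

    def redeem(self, token: str):
        """Return the user id for a valid token, or None; the token is always consumed."""
        entry = self._pending.pop(self._digest(token), None)
        if entry is None:
            return None
        user_id, expires_at = entry
        if self._clock() >= expires_at:
            return None
        return user_id
```

Storing only the digest means that someone who can read the table cannot reconstruct a working reset URL from it.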

Next version?

We challenge the reader to provide insights on what we should include or remove in the next update.

We intend to update this checklist periodically, at least once or twice a year, as technologies and vulnerabilities change. Or if any mistake is found :)

If by any chance you meet us at a security event, ask us for a few copies. We always travel with a bunch of them, and your developers will appreciate the “swag”.